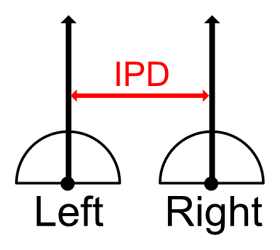

The IPD (interpupillary distance) is commonly set to 64 mm.

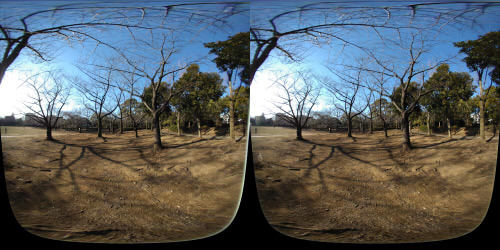

VR180 cameras commonly store the left-eye and right-eye images side by side, either as the raw fisheye images or after converting them to the equirectangular projection.
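As a small illustration of the side-by-side arrangement, the sketch below maps a pixel of one eye's image into the combined frame. It assumes, hypothetically, that the left eye occupies the left half and the right eye the right half, each `eye_width` pixels wide. The actual arrangement is whatever the VR180 metadata declares, and `sbs_pixel` is an illustrative name, not part of any VR180 tool.

```python
def sbs_pixel(eye: str, x: int, y: int, eye_width: int) -> tuple:
    """Map pixel (x, y) of one eye's image into a side-by-side frame.

    Assumption: left eye in the left half, right eye in the right half,
    each half eye_width pixels wide (hypothetical layout).
    """
    if not 0 <= x < eye_width:
        raise ValueError("x is outside the eye image")
    if eye == "left":
        return (x, y)
    if eye == "right":
        # Right-eye pixels are shifted by one eye width.
        return (x + eye_width, y)
    raise ValueError(f"unknown eye: {eye!r}")


# Usage: 2048-pixel-wide eye images packed into a 4096-pixel-wide frame.
print(sbs_pixel("left", 10, 5, 2048))
print(sbs_pixel("right", 10, 5, 2048))
```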

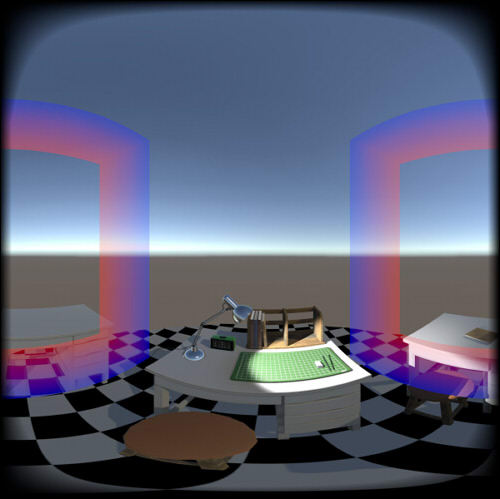

The following is an equirectangular image.

In the VR180 format, information such as the projection method and the stereo arrangement is attached to this image as metadata.
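To make the projection concrete, here is a minimal sketch that converts a pixel of one eye's 180-degree equirectangular image into a unit view direction. The axis convention (+Z forward, +X right, +Y up) and the exact span (longitude and latitude both from -90 to +90 degrees, sampled at pixel centers) are assumptions chosen to match the +Z/-Y/+Y/-X/+X naming used in this article.

```python
import math


def equirect180_to_direction(px, py, width, height):
    """Unit view direction for pixel (px, py) of a 180-degree equirect image.

    Assumed convention: +Z forward, +X right, +Y up. The image spans
    longitude -90..+90 degrees left to right and latitude +90..-90 degrees
    top to bottom, so every direction lies in the front hemisphere (z >= 0).
    """
    # Sample at the pixel center.
    lon = ((px + 0.5) / width - 0.5) * math.pi   # -pi/2 .. +pi/2
    lat = (0.5 - (py + 0.5) / height) * math.pi  # +pi/2 .. -pi/2
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    return (x, y, z)


# Usage: the center pixel of a 3x3 image looks straight ahead (+Z).
print(equirect180_to_direction(1, 1, 3, 3))
```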

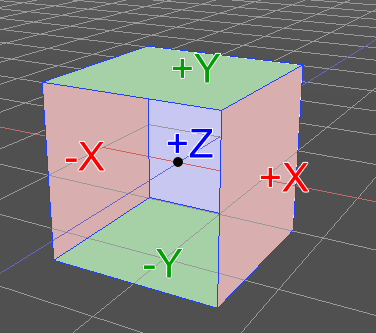

To generate an equirectangular image by rendering, we used the following procedure.

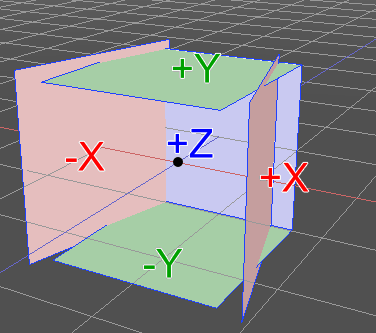

- Place five cameras, one facing each direction (+Z/-Y/+Y/-X/+X).

- During rendering, scan the hemisphere from the camera center and take the color where each ray intersects the projection plane of one of the cameras.
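The second step amounts to a cube-map lookup restricted to five faces. The sketch below picks the face a direction falls on by its dominant axis and returns where the ray hits that face's projection plane at distance 1. The axis convention is the same assumption as before, and the in-plane coordinates `(s, t)` are not tied to any particular image orientation; a real renderer would still flip or swap them to match each camera's image.

```python
def direction_to_face(d):
    """Choose which of the five cameras a front-hemisphere direction falls on.

    d is a direction (x, y, z); +Z forward, +X right, +Y up is an assumed
    convention. Returns (face, s, t): face is '+Z', '-Y', '+Y', '-X' or '+X',
    and (s, t) are the other two components divided by the dominant one,
    i.e. the hit point on that face's projection plane at distance 1.
    With a 90-degree field of view, s and t lie in [-1, 1].
    """
    x, y, z = d
    ax, ay, az = abs(x), abs(y), abs(z)
    if az >= ax and az >= ay:
        if z <= 0.0:
            raise ValueError("direction is not in the front hemisphere")
        return ("+Z", x / az, y / az)
    if ax >= ay:
        return ("+X" if x > 0 else "-X", z / ax, y / ax)
    return ("+Y" if y > 0 else "-Y", x / ay, z / ay)


# Usage: a direction mostly to the right lands on the +X camera.
print(direction_to_face((1.0, 0.0, 0.5)))
```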

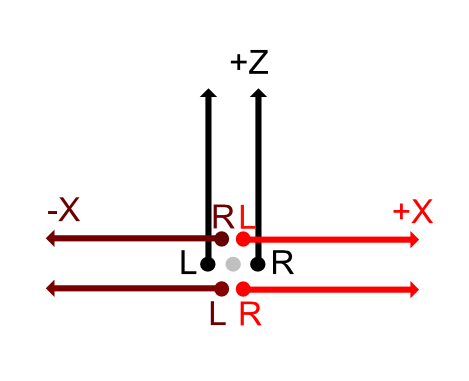

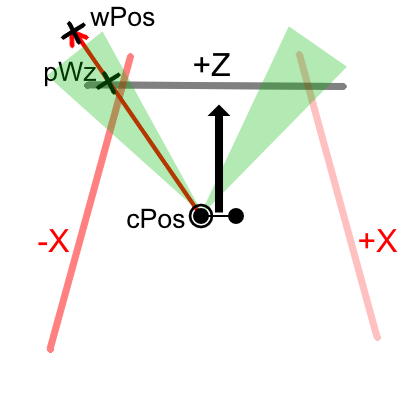

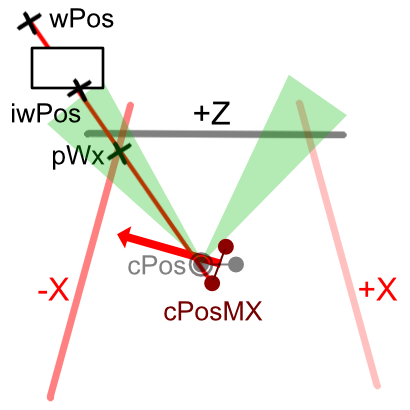

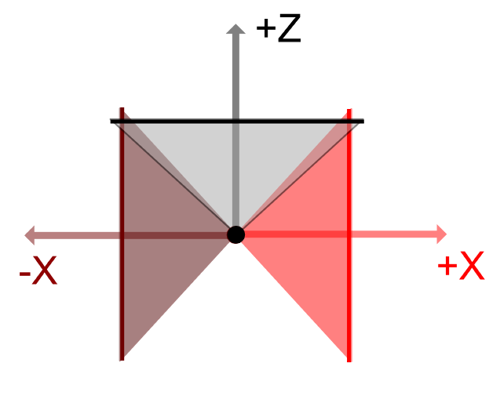

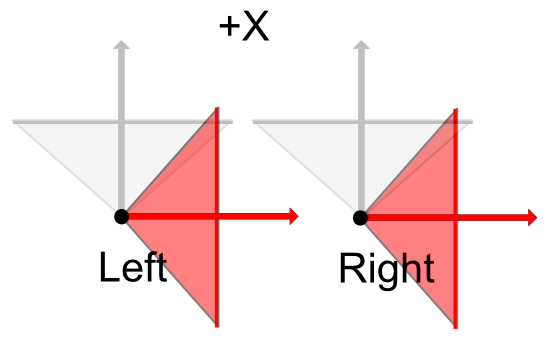

Viewed from above, the layout is as follows.

For each eye, five cameras placed at the camera center render in the +Z, -X, +X, -Y, and +Y directions.

The hemisphere is scanned from the camera center, and for each direction the color is taken from the point where it intersects a camera's projection plane.

The field of view of each camera is set to 95 degrees instead of 90 degrees, so adjacent faces overlap slightly at their boundaries; this overlapping region is then corrected so that the seams look smooth.
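Widening the field of view from 90 to 95 degrees extends each projection plane from a half-width of tan(45°) = 1.0 to tan(47.5°) ≈ 1.091, so directions near an edge are covered by two faces. The sketch below lists every face whose square frustum contains a given direction; the face axes follow the same assumed convention as above, and `faces_covering` is a hypothetical helper.

```python
import math

# Viewing axis of each of the five cameras (assumed: +Z forward, +X right, +Y up).
FACE_AXES = {
    "+Z": (0.0, 0.0, 1.0),
    "-Y": (0.0, -1.0, 0.0),
    "+Y": (0.0, 1.0, 0.0),
    "-X": (-1.0, 0.0, 0.0),
    "+X": (1.0, 0.0, 0.0),
}


def faces_covering(d, fov_deg=95.0):
    """Return the faces whose square frustum (fov_deg per side) contains d."""
    half = math.tan(math.radians(fov_deg) / 2.0)  # plane half-extent at distance 1
    hits = []
    for name, axis in FACE_AXES.items():
        depth = sum(a * c for a, c in zip(axis, d))  # component along the face axis
        if depth <= 0.0:
            continue  # the direction points away from this camera
        # Remove the axis component; the rest is the in-plane offset.
        perp = [c - depth * a for c, a in zip(d, axis)]
        if all(abs(p) / depth <= half for p in perp):
            hits.append(name)
    return hits


# Usage: 44 degrees from +Z toward +X is seen by two cameras at 95 degrees,
# but only by the +Z camera at 90 degrees.
a = math.radians(44.0)
print(faces_covering((math.sin(a), 0.0, math.cos(a))))
print(faces_covering((math.sin(a), 0.0, math.cos(a)), fov_deg=90.0))
```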

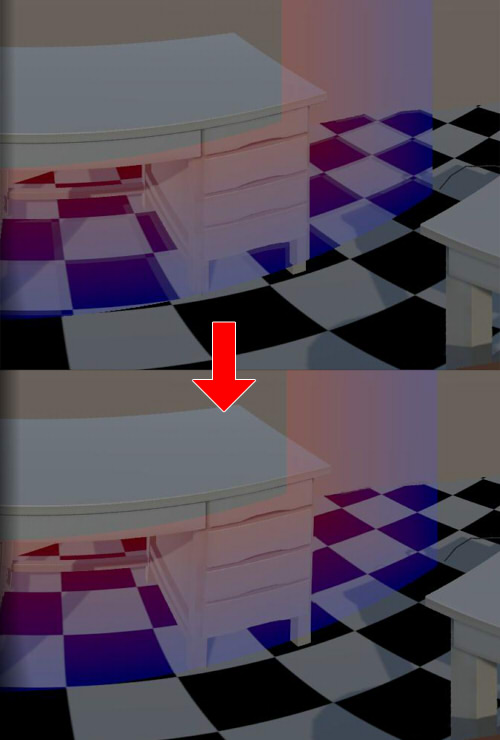

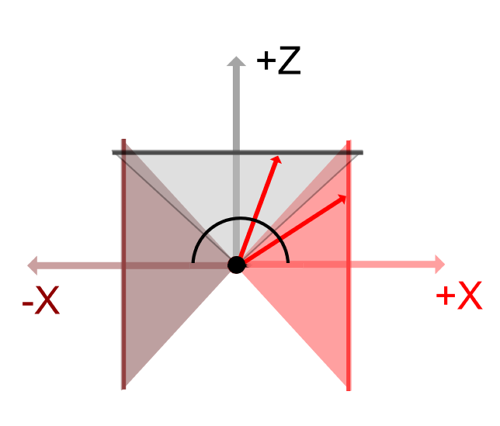

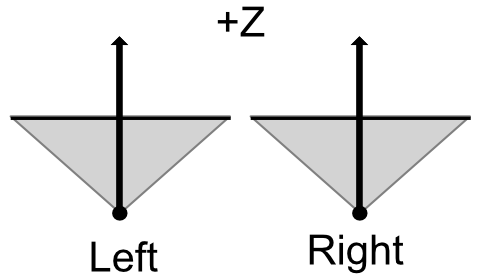

For the left and right viewpoints, the +Z cameras point in parallel directions and are separated by the IPD, as follows.

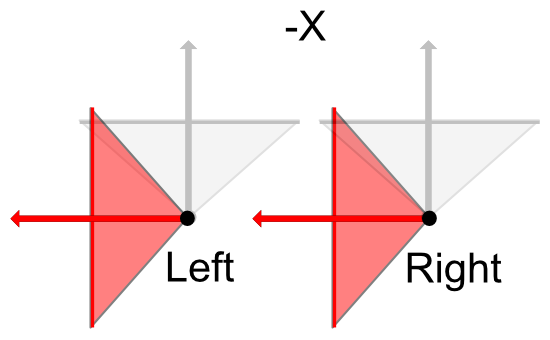

For the -X direction, the camera positions do not change; the cameras simply point in the -X direction.

The same applies to the +X direction.

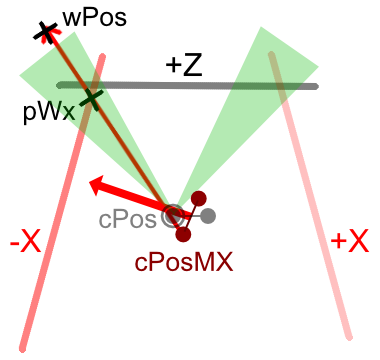

The same holds when rendering in the manner of a VR180 camera.

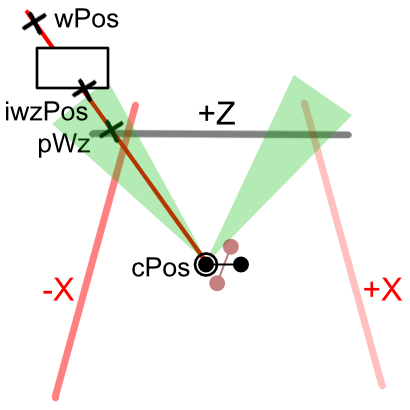

No matter which direction the left and right cameras face, their centers stay in the same positions, separated by the IPD.

As a result, parallax equal to the IPD is produced for the +Z/-Y/+Y planes, but for the -X/+X planes the two cameras are merely displaced forward and backward relative to each other along the viewing direction, so no parallax occurs.

When the result is viewed stereoscopically in VR, parallax is reproduced correctly when looking forward, up, or down, but it gradually disappears as the viewer turns to the left or right.

The same phenomenon occurs when shooting with only two physical fisheye lenses.
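The loss of parallax can be checked with a little vector arithmetic. Disparity comes only from the part of the eye baseline that is perpendicular to the viewing direction; the part along the viewing direction just moves one camera forward or back. The sketch below uses the 64 mm IPD mentioned earlier and places the eyes at ±32 mm on the X axis; that placement, the axis convention, and the helper name `effective_baseline` are assumptions for illustration.

```python
import math

IPD = 0.064  # metres (64 mm)

# Hypothetical placement: eye centres on the X axis, symmetric about the origin.
LEFT_EYE = (-IPD / 2.0, 0.0, 0.0)
RIGHT_EYE = (IPD / 2.0, 0.0, 0.0)


def effective_baseline(view_dir):
    """Length of the eye baseline perpendicular to a unit viewing direction."""
    b = [r - l for l, r in zip(LEFT_EYE, RIGHT_EYE)]
    along = sum(bi * di for bi, di in zip(b, view_dir))
    # Remove the component along the viewing direction.
    perp = [bi - along * di for bi, di in zip(b, view_dir)]
    return math.sqrt(sum(p * p for p in perp))


faces = {"+Z": (0, 0, 1), "+Y": (0, 1, 0), "-Y": (0, -1, 0),
         "+X": (1, 0, 0), "-X": (-1, 0, 0)}
for name, d in faces.items():
    print(name, round(effective_baseline(d), 4))
```

The +Z/+Y/-Y cameras keep the full 64 mm baseline, while the ±X cameras get zero, which is why disparity fades when turning left or right.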

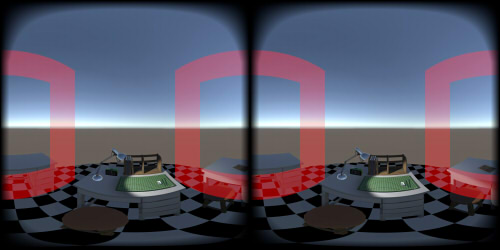

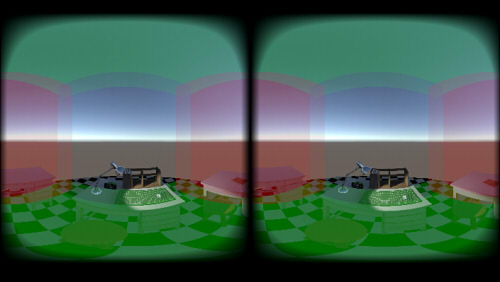

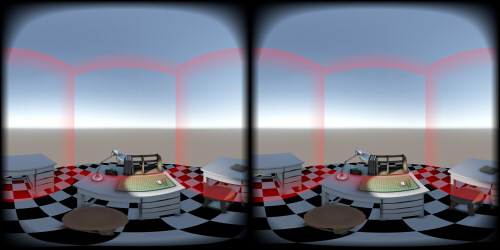

The rendered result in equirectangular format is as follows.

For clarity, the -X/+X faces are shown in red and the -Y/+Y faces in green.

With this rendering, the boundaries between faces do not need to be stitched, because all the cameras for a given eye share the same position.

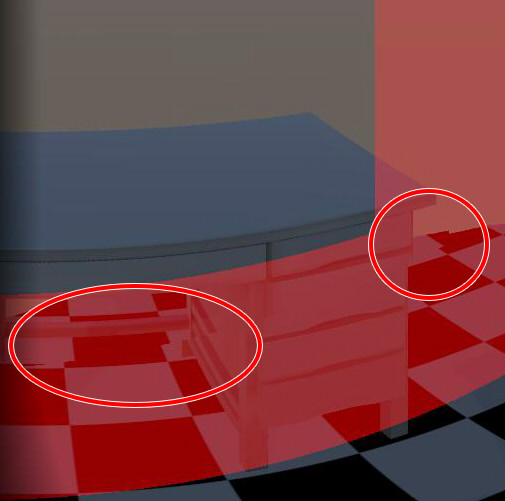

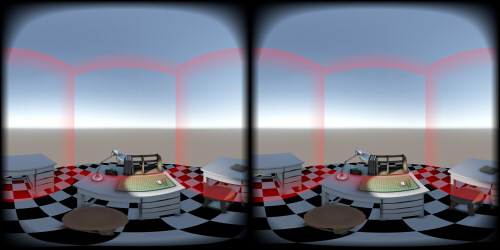

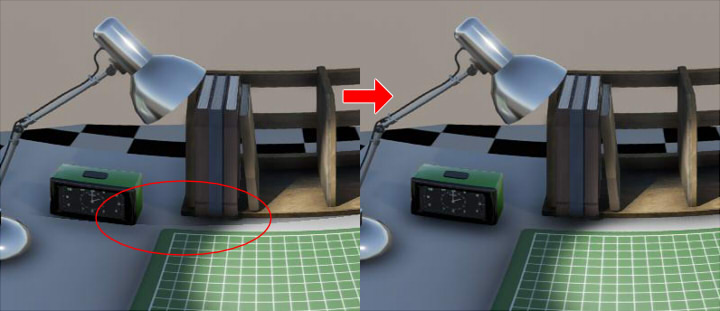

Correcting the face boundaries

If post-processing is applied to each cube map face separately, the faces will not connect seamlessly at their boundaries.

To alleviate this, the boundary regions were corrected using weight values.

In the images below, the boundary regions are shown in red.

Each boundary region is defined in advance at a fixed position, given as an angle in the spherical projection.

If the cube map faces are simply composited, seams at the boundaries become visible when the effect of Ambient Occlusion or Bloom is strong.

To solve this, the colors in the boundary regions are interpolated using weight values so that the seams are less noticeable.

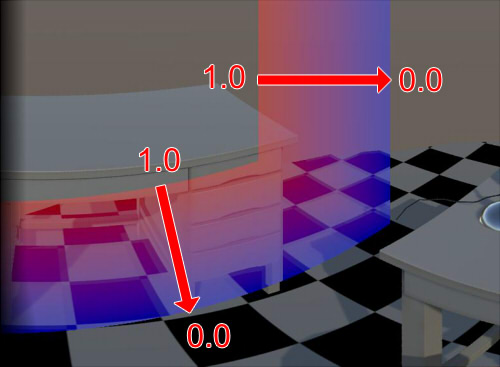

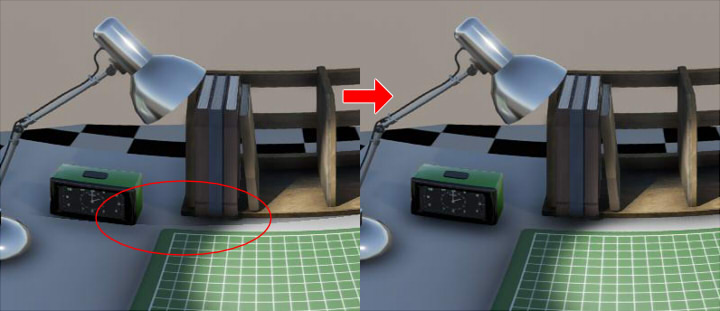

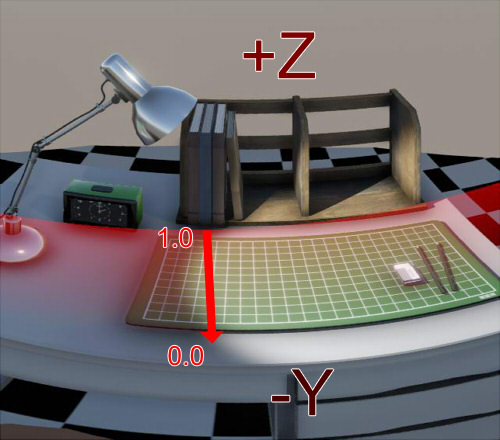

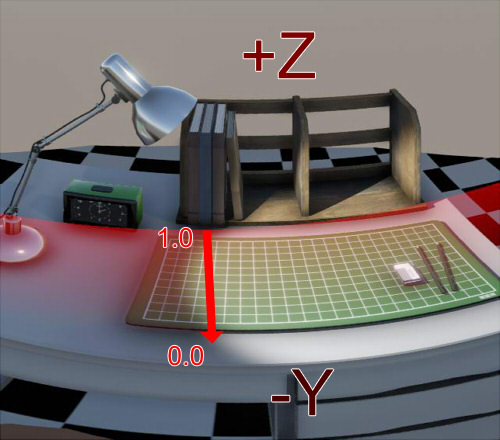

In the lower image, the weight approaches 1.0 at the edge of the boundary region that touches the +Z plane and approaches 0.0 at the edge that touches the -Y plane.

Outside the boundary regions, the weight is 0.0.
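One way to compute such a weight for the +Z/-Y boundary is sketched below. The edge between those faces lies in the plane y = -z, so the signed angle of a direction from that plane is positive on the +Z side and negative on the -Y side. The article does not state the band width or the shape of the ramp, so both are assumptions here: a half-width of 2.5 degrees (half of the 95 - 90 degree widening) and a linear ramp. The guard that limits the edge to the region between the ±X faces is a simplification, and `zy_boundary_weight` is a hypothetical name.

```python
import math

HALF_BAND_DEG = 2.5  # hypothetical half-width of the boundary band


def zy_boundary_weight(d, half_band_deg=HALF_BAND_DEG):
    """Weight for the +Z / -Y boundary band, for a unit direction d.

    Inside the band the weight ramps linearly from 0.0 (-Y side) to
    1.0 (+Z side); outside the band it is 0.0, as described above.
    """
    x, y, z = d
    # Only treat the part of the y = -z plane that lies between the +/-X faces
    # as the +Z / -Y edge (simplification).
    if z <= 0.0 or y >= 0.0 or abs(x) > max(z, -y):
        return 0.0
    # Signed distance to the plane y + z = 0, with its normal pointing toward +Z.
    signed = (y + z) / math.sqrt(2.0)
    angle = math.degrees(math.asin(max(-1.0, min(1.0, signed))))
    if abs(angle) > half_band_deg:
        return 0.0
    return 0.5 + angle / (2.0 * half_band_deg)


# Usage: exactly on the edge the two faces are weighted equally.
s = math.sqrt(0.5)
print(zy_boundary_weight((0.0, -s, s)))
```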

In the boundary region, the +Z and -Y planes overlap each other.

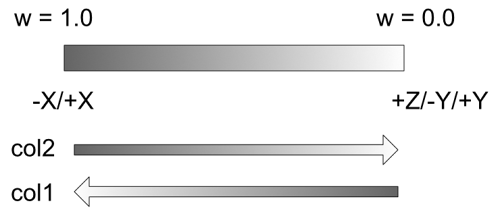

This means the pixel color on the +Z plane (col1) and the pixel color on the -Y plane (col2) can both be acquired in advance.

With weight w, the two colors are blended as follows:

```hlsl
// For the +Z/-Y boundary: col1 = pixel color on the +Z plane,
// col2 = pixel color on the -Y plane,
// w = boundary weight (1.0 at the +Z side of the band, 0.0 at the -Y side).
float3 col = col2 * (1.0 - w) + col1 * w;
```

This correction is applied to each of the following boundaries:

- the -Y/+Y/-X/+X planes where they meet the +Z plane,

- the -X/+X planes where they meet the -Y plane,

- the -X/+X planes where they meet the +Y plane.

This reduces, to some extent, the color differences that post-processing causes at the face borders.
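The blend is a per-channel linear interpolation, equivalent to HLSL `lerp(col2, col1, w)`. The Python sketch below mirrors it so the behavior across a band can be checked; the example colors are made-up values and `blend_boundary` is a hypothetical name.

```python
def blend_boundary(col1, col2, w):
    """Per-channel blend: col = col2 * (1 - w) + col1 * w.

    col1 is the color from the +Z face, col2 the color from the -Y face,
    and w the boundary weight in [0, 1].
    """
    if not 0.0 <= w <= 1.0:
        raise ValueError("w must be in [0, 1]")
    return tuple(c2 * (1.0 - w) + c1 * w for c1, c2 in zip(col1, col2))


# Usage: walk across the band from the -Y side (w = 0) to the +Z side (w = 1).
col_z = (1.0, 0.2, 0.2)  # example +Z color (made-up values)
col_y = (0.2, 0.2, 1.0)  # example -Y color (made-up values)
for w in (0.0, 0.5, 1.0):
    print(w, blend_boundary(col_z, col_y, w))
```

At w = 0 the result is exactly the -Y color and at w = 1 exactly the +Z color, so the band fades continuously into each face.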